In part of one of this series I went down the path of Splunking game data from a 2 day, single challenge event. In this installment I'm going to dive into a bit of data from an event that encompassed several different challenges.

In looking back at the dashboard I created, how I was working with some of the data, and the sheer volume of data across all of the challenges - the dashboard itself might not have much value from a game analytic perspective lol. I also realized how much I focused on one challenge - waves. In looking back I think that was largely due to the numbers being smaller. The approaches to displaying the data will work across all of the events so at some level it would be a lot of copy and paste as well. At any rate hopefully folks will take something away from the underlying queries and some of the challenges with trying to display the data/volume of data. TBH this is the more primary focus of this overall effort.

Data was captured across 4 challenges, 3 player level brackets, and listed scores for the top 100 positions (other than the clan boss fight).

- Waves - 5 separate groups of mobs attack your heroes

- Pacifism - multi-level, player vs environment (PvP) track where you couldn't bring damage dealers

- No Healers - multi-level, PvE track where you couldn't bring a healer

- Boss - big bad dude that everyone in the clan attacked

The brackets, from top to bottom, is Expert, Advanced, & Intermediate. So here is the dashboard...

So before I could start making the dashboard I needed to coalesce the data. I wanted to have one master sheet for each challenge but each challenge bracket was in a separate lookup (way to use csv data in Splunk) and each sheet only had 1 column - the score. The merging of this data could easily be done outside of Splunk but the methods here might prove useful to someone.

The first attempt was pretty manual - pull the bracket info up, add a column that added their finish position, add a column that indicated what bracket I was adding, pull up the master sheet for that event challenge, and then write out the updated csv. In this case I'm adding the advanced waves bracket to the master waves file.

| inputlookup waves_a_orig.csv

| streamstats count as finish_position

| eval bracket = "Intermediate"

| inputlookup mm1_waves.csv append=t

| outputlookup mm1_waves.csv

The data is already sorted by highest position at the top and then works its way down. Streamstats allows you to do streaming statistical functions that span one or more events. In this case it starts at the first event and performs a running count of how many events there are. This is a great way to enumerate finish position - the first line finished first and is one event - write that out. The second line shows who finished second and the count of events is now 2 - write that out, etc.

So while this is effective it isn't exactly quick. What about using the append command? Yep works a treat! Append does what you think. You run a search, run another search, the results of the second search are added to the end of the first search's results. In this case the data had a column named "Score" that I wanted to make lower case.

| inputlookup pacifism_i_orig.csv

| streamstats count as finish_position

| rename Score as score

| eval bracket = "Intermediate"

| append

[inputlookup pacifism_a_orig.csv

| streamstats count as finish_position

| eval bracket = "Advanced"

| rename Score as score]

| append

[inputlookup pacifism_e_orig.csv

| streamstats count as finish_position

| eval bracket = "Expert"

| rename Score as score]

| output lookup mm1_pacifism.csv

So that out of the way let's look at the dashboard panels

1 - Waves Top 5 Finishers

Go back and look at this one - it sucks right? This is an example of a query attempt that didn't turn out like I had hoped so I left it in. The dashboard creation process is often iterative /shrug. I wanted to have the top 5 finishers in each bracket for the wave event and show the wave level they finished on in a cascading fashion. It didn't turn out as well as I had hoped. It does show the cascade but you can't really discern much else. Unlike the queries from last time I did use a where statement with the initial pulling up of the csv to only return those records where the person finished in the top 5 positions.

| inputlookup mm1_waves.csv where finish_position <=5

| sort -wave

| fields - finish_position

Going back after the fact a slightly better way to do this is with the chart command. The following search gets you the result that follows

| inputlookup mm1_waves.csv where finish_position <=5

| chart count by wave bracket

| sort -wave

In this case the results are showing the top 5 finishers in each bracket then how many people finished at that wave level. In other words in the Expert bracket (green) finishers 3 - 5 all finished on the same level.

2 - Waves Pushed or Not

So this waves event threw 5 groups mobs at your hero party, one wave at a time, for a certain amount of game energy. You got credit for the number of waves you defeated. A clan mate noted that for most folks if they are "pushing" their team their scores would likely not end in a 0 or 5. If a person's score ended in one of those numbers it was likely the player had simply finished that series of mobs and then didn't return to the game, or that event, for one reason or another. The data does sorta bear out that hypothesis but I'd argue only on the lower end of the brackets (again only the top 100 scores in each bracket were captured). In my last post I mentioned not really liking pie charts - this is a good example of why. It shows some high level information across a lot of data. The eval if statement is a simple way to determine if the wave ended in a 0 or 5 allowing the calculation by that created field. Incidentally doing if and case statements is one of the tremendous value ads within Splunk.

| inputlookup mm1_waves.csv

| eval foo = if(wave LIKE "%0" OR wave LIKE "%5", "Coasted", "Pushed")

| stats count by foo

3 - Pushed or Not - More Pie!

So while still pie graphs this at least breaks down the data by bracket allowing a bit more insight. In many cases as you develop a dashboard you'd start out at a macro level and get more granular as you go. You could also do something like make this dashboard panel, "hide" it, and if a user clicks on the first graph this panel pops out. Lots of options. This panel also uses the Trellis layout do show all three brackets

| inputlookup mm1_waves.csv

| eval foo = if(wave LIKE "%0" OR wave LIKE "%5", "Coasted", "Pushed")

| stats count by bracket foo

4 - Waves Pushed or Not by Bracket

More waves info! Again part of the goal with this effort is to figure out what queries/panels make sense. Some of these are more viable depending on how you are presenting the data. Is it going to be in Splunk, going to be displayed on a TV, on a PDF, etc. In this case I wanted to dive a bit more into the coasting or pushed across waves folks completed and did anything stand out. So here are some observations (that are maybe harder to discern in the screenshot. Remember these are people in the top 100 finishing positions.

The screenshot likely doesn't lend itself well for analysis. As you increase the scope of the data you are looking at though this sort of bar graph setup can be a good way to wrap your head around it. Of course in Splunk as you highlight an item on the legend or the graph itself the rest of data fades out a bit making it easier to tease out some nuances.

When using the chart command to essentially convert the data into an xy series you can only do that across 2 data dimensions. So what happens where you want to display a third. I wanted to see pushed or coasted by bracket and show what levels folks landed on. The trick is to use an eval to combine the two fields.

| inputlookup mm1_waves.csv

| eval foo = if(wave LIKE "%0" OR wave LIKE "%5", "Coasted", "Pushed")

| eval bar = bracket.": ". foo

| chart count by wave bar

5 - Wave: Finish Deltas

One of the things I was curious about was how much did the top finishing positions actually finish by. This calls for a more complex use of streamstats

| inputlookup mm1_waves.csv where finish_position <= 5

| sort bracket finish_position

| streamstats window=1 current=false max(wave) as previous by bracket

| eval foo = previous - wave

| eval foo = if(isnull(foo), 0, foo)

| chart list(foo) by finish_position bracket

| table finish_position Intermediate Advanced Expert

So let's start with the top 5 positions. Ultimately what we want to do is compare the wave finish level of any given finisher to finish level of the person who finished just before them. To do that we first need to sort by bracket and then by finish level. Streamstats needs to be structured such that we want to use just one event (window=1) and NOT use the current event (current=false). We will put that data into a field called "previous" allowing us to do the math (eval statement). Then since we only are getting the top 5 the 5th position doesn't have anything to compare against so let's just put a 0 in that slot.

Two issues with this. The first is the resulting data doesn't "read" well - ie the second place finisher in the intermediate bracket lost by/was behind by 13 levels. The other thing is what about pulling in the data from 1 more finish position than you are going to display which allows you to calculate what that last displayed set actually won by. So...

6 - Waves: Finish Deltas 2

| inputlookup mm1_waves.csv where finish_position <= 6

| sort bracket -finish_position

| streamstats window=1 current=false max(wave) as previous by bracket

| eval foo = abs(previous - wave)

| search finish_position <= 5

| chart list(foo) by finish_position bracket

| table finish_position Intermediate Advanced Expert

There are 3 changes. The first is to pull out the top 6 finish positions; then display the top 5. The second is sort in reverse order. You are still comparing finishing levels but by sorting it this way vs the way in the 5th panel the data can be displayed as "the 1st place finisher in Intermediate won by 13 levels". Now because I didn't change the subtraction though the number would be negative. Simple enough to do "waves - previous" but might as well show something else. Get the absolute value of the change; removes that negative. To help understand what the data looks like with the streamstats command output looks take a look at the following. This uses the query up until it gets to the eval foo line.

Which for reference ultimately is displayed as

For the next 3 panels I wanted to show the curve of relative placement across the rest of the individual challenges using different visualizations.

7 - Top 10 Wave Finishes

| inputlookup mm1_waves.csv where finish_position <= 15

| chart max(wave) by finish_position bracket

| table finish_position Expert Advanced Intermediate

8 - No Healers Top 15 by Bracket

| inputlookup mm1_no_healers.csv where finish_position <= 15

| chart max(score) by finish_position bracket

| table finish_position Expert Advanced Intermediate

9 - Pacifism - Top 5

| inputlookup mm1_pacifism.csv where finish_position <= 5

| chart max(score) by finish_position bracket

| table finish_position Expert Advanced Intermediate

10 - Waves: Clan Finishes

So yes....more waves lol. In this case though individual waves completed added to your clan's total. What I like about this, like the last blog installment, is showing overall clan scores, how many members are in the clan, and how many people contributed. Once again - if folks are in clans outside the top 7 or 8 they might be well served by thinning the ranks...or doing a merge of active players. The query is straight forward since the of all the data in this csv I don't really want to display the finish position.

| inputlookup mm1_waves_clan.csv

| fields - finish_position

11 - Waves: Clans by Member/Contributor

One of the things to look at across this data is take a look at the average number of waves a clan member contributed. And to do something a bit different I threw in some color based on the scale of numbers. If you look closely the color can help show SpawnsofDiablo, 3rd place finisher, had a slightly higher average wave finish per player but had 2 fewer members than Assassins who finished 2nd. That likely isn't THE reason for their respective clan finish positions but it does contribute to the story. Again the use of color can help pull some of those subtleties out here and in other datasets.

| inputlookup mm1_waves_clan.csv

| eval avgScore_Contributor = Score/Contributors

| eval avgScore_Member = Score/Members

| foreach avg*

[eval <<FIELD>> = round(<<FIELD>>) ]

| fields Clan Members Contributors avg*

Since I'm displaying numbers vs showing them on a graph I want to round the averages to the nearest integer. I can do that with 2 evals but let's try something more fun - a looping eval function. I'm saying for any field that starts with "avg", in this case avgScore_Contributor and avgScore_Member, round any numbers in those fields.

12 - Clan Boss Scores

The last event type is one where everyone in the clan beats up on a couple big boss mobs. Since I have that data in one file this is just a matter of displaying the scores. I also wanted to display members vs contributors in a different fashion than on the waves event. What is very interesting is the step down nature of the clan results: 1 to 2, 4 to 5, 5 to 6, 15 to 16, and even 21 to 22. All of this data is manually collected which is a shame - it would be interesting to dive into all of the pieces that contribute to why those drops exist.

| inputlookup mm1_clan_boss.csv

| table finish_position Score

13 - Clan Boss Participation

Interesting to me is this is probably the first time I've used an area chart where I have the data elements overlap vs stack. I often see overlapping area charts for things like memory or cpu across multiple systems which has, IMO, somewhat limited usability. At any rate in this case it helps showcase the slope of clan members participating in this event. Unfortunately the data doesn't tell you how many times someone fought the boss; just their aggregate contribution. There is value in looking at this panel by itself but also looking up and down comparing activity vs total clan scores.

| inputlookup mm1_clan_boss.csv

| table Clan Contributors Members

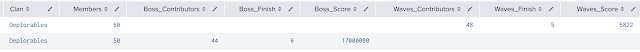

14 - Overall Clan Performance

We've FINALLY made it to the end. If you think this was a long read you'd be surprised how long it took me to write lol.

So the first thing to mention is you will want to install the Parallel Coordinates app from Splunkbase to get this visualization. I'm pulling in the top 20 clan finish positions (because those are the only clan names I wrote down) and then limiting the results to just those where the clan placed in the top 20 for both clan events. 18 clans fit that description. The lines in the screenshot are light colored and fairly hard to see. This visualization can be great though to get your arms across larger, multi-dimension data sets to see where things converge or diverge. There is also the ability to select a range on one or more Y axis which highlights those lines within those ranges.

| inputlookup mm1_waves_clan.csv

| head 20

| rename Contributors as Waves_Contributors Score as Waves_Score finish_position as Waves_Finish

| append

[| inputlookup mm1_clan_boss.csv

| head 20

| rename Contributors as Boss_Contributors Score as Boss_Score finish_position as Boss_Finish]

| stats max(Members) as Members list(Waves_*) as Waves_* list(Boss_*) as Boss_* by Clan

| table Clan Members Waves_Finish Waves_Contributors Boss_Finish Boss_Contributors

| sort -Clan

| search Boss_Finish=* Waves_Finish=*

Pull in the first set of data, limit the results to the top 20, and then rename the fields to distinguish one event from the other. Then append the second clan event's data; same methodology. So now we have an issue - data for any given clan is spread over two rows

One way to deal with that is to do a stats command where you get the values for any given field and split by the clan name. A little trick you can do when you have fields that have the same stem or end is to use wildcards like I've done. The last real thing I'm doing is I want to display just those clans who finished in the top 20 across both events; thus the finish search command.

So there you go! Hopefully this gives you a few examples of searches against perhaps some non standard data. Of course with Splunk data is simply data and all of it is queryable.

As I've alluded to data collection for this was manual. Thanks to LoucoReiPirata and jopan for their help!

No comments:

Post a Comment